April 15, 2022

Despite its drawbacks and aging technology, video content in the SDR (Standard Dynamic Range) format still holds the leading position in the media market today. The HDR (High Dynamic Range) format is only just beginning its expansion.

In this article, we consider the difference between SDR and HDR and examine the main HDR standards, along with their identification and validation for H.264/AVC, H.265/HEVC, VP9 and AV1. The article will be useful for QA engineers, application developers, OEMs and SoC designers who want to implement or identify HDR content.

Differences between HDR and SDR

Colorimetric parameters

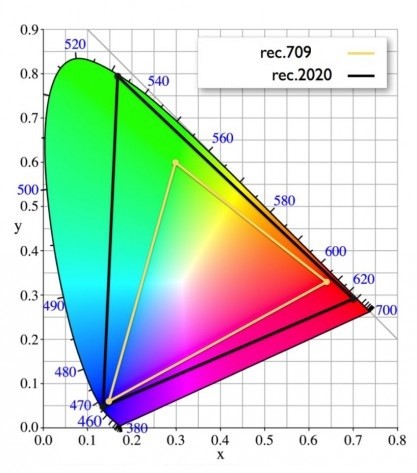

The SDR format is based on the colorimetric parameters described in Rec. ITU-R BT.709. They cover only 35.9% of the spectrum visible to the human eye in the CIE 1931 system (Figure 1). HDR, by contrast, uses the colorimetric parameters of Rec. ITU-R BT.2020, which cover 75.8% of the spectrum.

Figure 1. Color space of the CIE 1931 system.

Color depth

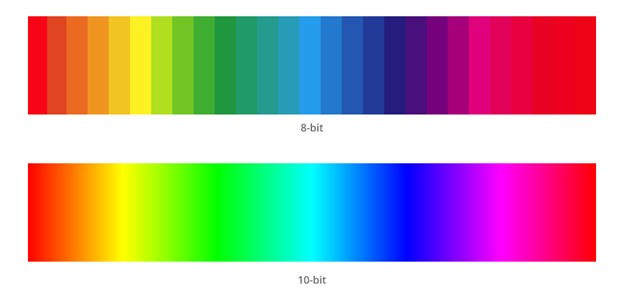

SDR has a color depth of 8 bits. SDR does not prohibit the use of 10 bits, but in practice the vast majority of video content is compressed at a color depth of 8 bits. This means that each of the primary colors (red, green and blue) can take 2⁸ = 256 values, for a total of 256 × 256 × 256 = 16,777,216 colors. This is a large number, but the human eye can distinguish many more, so in practice it notices stepped transitions (banding) in SDR videos (Figure 2). This is especially noticeable in scenes with gradient backgrounds, such as the sky.

Figure 2. Color depth of 8 and 10 bits.

The minimum color depth in HDR is 10 bits: 1,024 possible values for each primary color, or 1,073,741,824 colors in total, which is 64 times more than SDR. Such images are much closer to reality; however, under certain circumstances the human eye can still notice banding in color transitions.
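The arithmetic behind these figures can be checked with a short sketch. The function name `color_count` is only illustrative.

```python
def color_count(bits_per_channel: int) -> tuple[int, int]:
    """Return (levels per primary color, total RGB colors) for a bit depth."""
    levels = 2 ** bits_per_channel
    return levels, levels ** 3


sdr_levels, sdr_colors = color_count(8)
hdr_levels, hdr_colors = color_count(10)

print(sdr_levels, sdr_colors)    # levels and colors at 8 bits
print(hdr_levels, hdr_colors)    # levels and colors at 10 bits
print(hdr_colors // sdr_colors)  # how many times more colors 10 bits gives
```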

Luminance

The human eye is structured in such a way that, in addition to the color components, it also distinguishes the luminance (luma) component, to which it is much more sensitive. SDR is limited to a luminance of 100 cd/m2, while HDR standards theoretically reach 10,000 cd/m2.

In practice, HDR-supporting displays in the mid-price segment claim a luminance of 1,000 cd/m2, while the premium segment offers a luminance of up to 4,000 cd/m2, but only for certain scenes and for a short time.

HDR standards

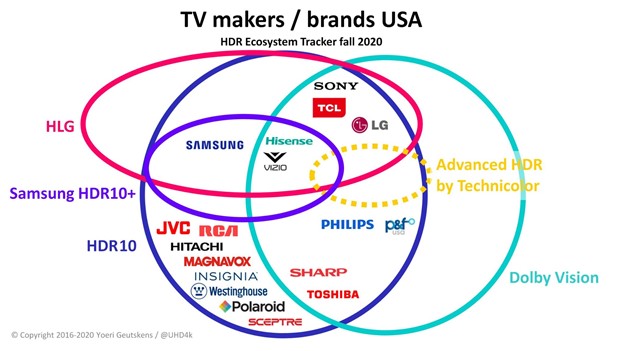

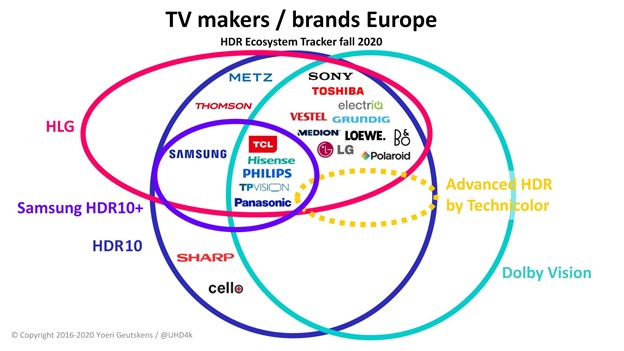

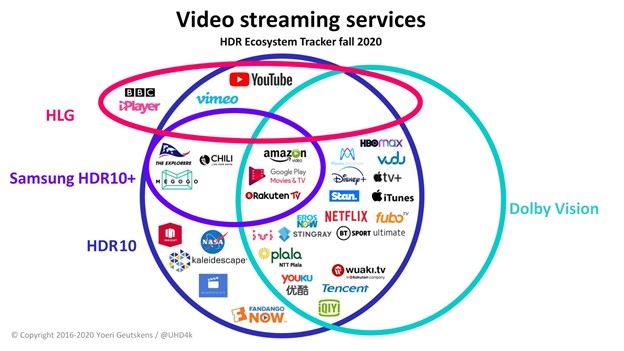

HDR is an umbrella term, since there are several HDR implementation standards from different vendors on the market. The four most widely used HDR standards are HDR10, HLG, HDR10+ and Dolby Vision. Figures 3.1 and 3.2 show the brands of HDR-supporting TV manufacturers, and Figure 4 shows the current HDR-supporting streaming services.

Figure 3.1. HDR TV brands in the USA.

Figure 3.2. HDR TV brands in Europe.

Figure 4. HDR-supporting streaming services.

To play HDR content, you need properly prepared content that conforms to the standard, as well as an HDR-supporting decoder and display.

With StreamEye you can check parameters at the elementary stream level, while Stream Analyzer helps to verify parameters at both the elementary stream and the media container levels for files. The upcoming release of Boro will help identify the HDR format and present its metadata for live streams.

HDR10

The standard was adopted in 2014. HDR10 has gained wide acceptance due to its ease of use and absence of license fees. The standard describes video content that complies with the recommendations in UHDTV Rec. ITU-R BT.2020:

| Parameter | Value |
|---|---|
| Resolution | Up to 7680 × 4320 (8K) pixels |
| Aspect ratio | 16:9 |
| Pixel ratio | 1:1 |
| Scanning | Progressive |
| Frame rate (fps) | Up to 120 |
| Color depth | 10–12 bit |
| Colorimetric parameters | BT.2020 |
| Luminance | Up to 10,000 cd/m2 in brightly lit scenes, down to 0.0001 cd/m2 in dark scenes |

HDR10 is based on the PQ (Perceptual Quantizer) EOTF, an electro-optical transfer function, which is why such video content is not compatible with SDR displays. HDR10 also carries a single layer of video content.
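As a rough illustration of what the PQ EOTF does, the sketch below maps a normalized signal value to absolute luminance and back. The constants are those published in SMPTE ST 2084 and Rec. ITU-R BT.2100; the function names are illustrative, and the code is a minimal sketch rather than a reference implementation.

```python
# PQ constants as published in SMPTE ST 2084 / Rec. ITU-R BT.2100.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK = 10000.0  # cd/m2, the theoretical PQ maximum


def pq_eotf(signal: float) -> float:
    """Map a non-linear PQ signal in [0, 1] to display luminance in cd/m2."""
    p = signal ** (1 / M2)
    return PEAK * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def pq_inverse_eotf(luminance: float) -> float:
    """Map display luminance in cd/m2 to a non-linear PQ signal in [0, 1]."""
    y = (luminance / PEAK) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


print(pq_eotf(1.0))            # full-scale signal
print(pq_inverse_eotf(100.0))  # signal level of the 100 cd/m2 SDR reference
```

Roughly half of the PQ signal range is spent on luminance levels below the SDR limit, which is one way to see why a PQ signal shown on an SDR display without conversion looks wrong.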

The standard employs static metadata that is applied to the entire video sequence. On the one hand, static metadata simplifies the implementation. On the other hand, it does not account for the different tone mapping needed by static and dynamic, bright and dark scenes, so global compensation methods have to be applied. As a result, HDR10 is not able to fully convey the author’s ideas and vision.

HDR10 metadata includes mastering display colour volume and content light level information.

Mastering display colour volume describes the parameters of the display that was used to create the video content; these are treated as the reference parameters. When the video content is played, the display is readjusted in relation to this reference.

Mastering display colour volume describes:

- Display_primaries, X and Y coordinates of the three primary chrominance components;

- White_point, X and Y coordinates of the white point;

- Max_display_mastering_luminance, the nominally maximum luminance of the mastering display in units of 0.0001 cd/m2;

- Min_display_mastering_luminance, the nominally minimum luminance of the mastering display in units of 0.0001 cd/m2.
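The fields listed above are stored as integer code values, so a reader has to scale them before use. The sketch below shows one way to do that. The luminance unit comes from the list above; the chromaticity increment of 0.00002 is an assumption taken from the H.265 SEI syntax, and the raw values (BT.2020 primaries, D65 white point) and all names are illustrative only.

```python
from dataclasses import dataclass

LUMINANCE_UNIT = 0.0001      # cd/m2 per code value, as listed above
CHROMATICITY_UNIT = 0.00002  # assumption: increment used by the H.265 SEI syntax


@dataclass
class MasteringDisplay:
    primaries: list       # three (x, y) pairs
    white_point: tuple    # (x, y)
    max_luminance: float  # cd/m2
    min_luminance: float  # cd/m2


def decode_mastering_display(raw_primaries, raw_white_point, raw_max, raw_min):
    """Convert raw integer code values to chromaticity coordinates and cd/m2."""
    def to_xy(pair):
        return (pair[0] * CHROMATICITY_UNIT, pair[1] * CHROMATICITY_UNIT)

    return MasteringDisplay(
        primaries=[to_xy(p) for p in raw_primaries],
        white_point=to_xy(raw_white_point),
        max_luminance=raw_max * LUMINANCE_UNIT,
        min_luminance=raw_min * LUMINANCE_UNIT,
    )


# Illustrative raw values: BT.2020 primaries, D65 white point,
# 1,000 cd/m2 maximum and 0.05 cd/m2 minimum mastering luminance.
display = decode_mastering_display(
    raw_primaries=[(8500, 39850), (6550, 2300), (35400, 14600)],
    raw_white_point=(15635, 16450),
    raw_max=10_000_000,
    raw_min=500,
)
print(display)
```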

Content light level information gives the upper limits of the nominal target luminance level of the images. It includes:

- Max_content_light_level (MaxCLL), indicates the upper limit of the maximum pixel luminance level in cd/m2;

- Max_pic_average_light_level (MaxFALL), specifies the upper limit of the maximum average luminance level of the whole frame in cd/m2.
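To make the two values concrete, the sketch below derives them from decoded frames. It assumes each pixel is given as linear-light (R, G, B) values in cd/m2 and that a pixel's light level is the largest of its three components; that is a common convention, but it is an assumption here, not something stated in this article. The function name is illustrative.

```python
def content_light_levels(frames):
    """Return (MaxCLL, MaxFALL) for an iterable of frames.

    Each frame is a list of (R, G, B) linear-light values in cd/m2.
    """
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        # Light level of a pixel: the largest of its R, G, B components (assumption).
        pixel_peaks = [max(pixel) for pixel in frame]
        if not pixel_peaks:
            continue
        max_cll = max(max_cll, max(pixel_peaks))
        max_fall = max(max_fall, sum(pixel_peaks) / len(pixel_peaks))
    return max_cll, max_fall


frames = [
    [(100, 50, 20), (400, 300, 1000)],  # frame average of pixel peaks: 550
    [(200, 200, 200), (600, 10, 10)],   # frame average of pixel peaks: 400
]
print(content_light_levels(frames))
```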

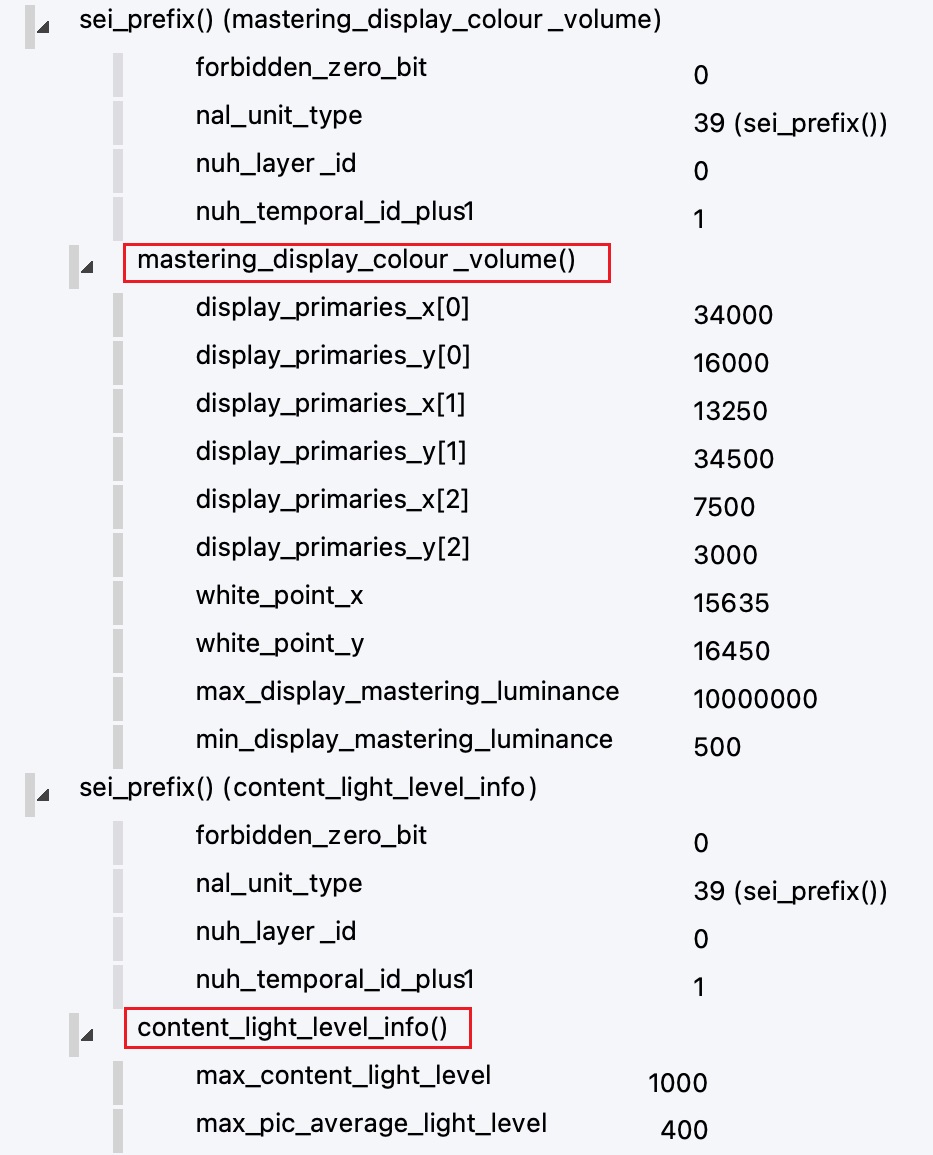

In H.264/AVC and H.265/HEVC video formats, HDR10 metadata can be specified at the following levels.

- At the elementary video stream level, in the corresponding SEI messages of the IDR access unit. Figure 5 shows an example of the Mastering display colour volume and Content light level information SEI messages for an HEVC video sequence: maximum nominal luminance 1,000 cd/m2, minimum nominal luminance 0.05 cd/m2, MaxCLL 1,000 cd/m2, MaxFALL 400 cd/m2, plus the coordinates of the chromaticity components and the white point;

- At the MP4 media container level: mdcv (Mastering display colour volume) and clli (Content light level) boxes;

- At the MKV/WebM media container level: SmDm and CoLL boxes.

Figure 5. SEI messages: Content light level and Mastering display colour volume.

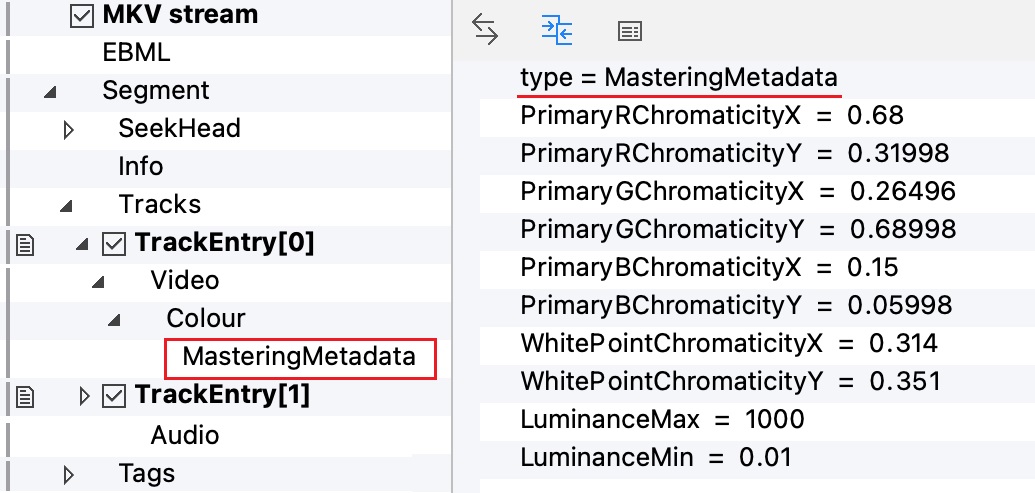

VP9 carries data at the media container level:

- MKV/WebM: SmDm (Mastering Display Metadata) and CoLL (Content Light Level) (Figure 6);

- MP4: mdcv and clli boxes.

Figure 6. Mastering Metadata for VP9 video sequence.

AV1 carries metadata:

- at the elementary video stream level, and signals it using the OBU syntax (metadata_hdr_mdcv and metadata_hdr_cll);

- at the MP4 media container level: mdcv and clli boxes;

- at the MKV/WebM media container level: SmDm and CoLL boxes.

HLG

The HLG standard appeared in 2015 and has also been widely adopted. The standard describes video content that conforms to BT.2020.

| Parameter | Value |
|---|---|
| Resolution | Up to 7680 × 4320 (8K) pixels |
| Aspect ratio | 16:9 |
| Pixel ratio | 1:1 |
| Scanning | Progressive |
| Frame rate (fps) | Up to 120 |
| Color depth | 10–12 bit |
| Colorimetric parameters | BT.2020, BT.2100, BT.709 |
| Luminance | Up to 1,000 cd/m2 in brightly lit scenes, down to 0.0001 cd/m2 in dark scenes |

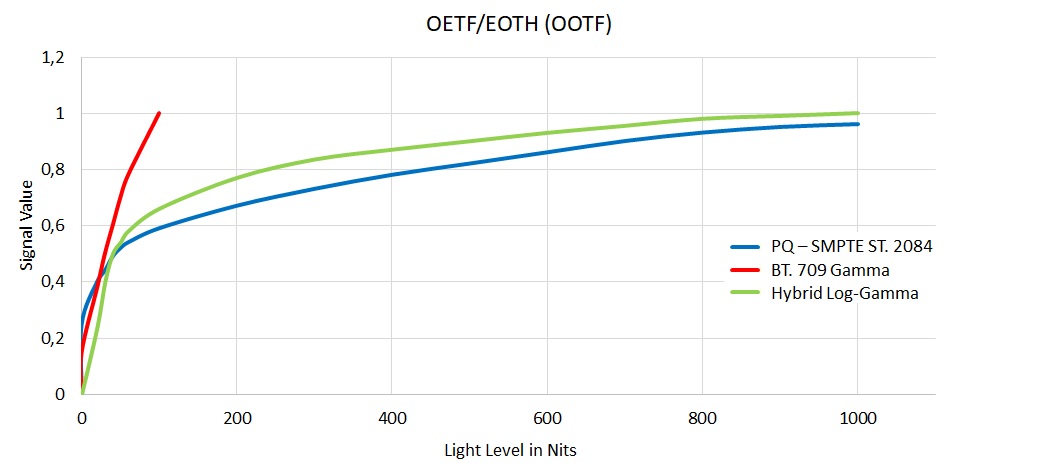

HLG, like HDR10, carries one layer of video content. Unlike HDR10, HLG has no metadata, because it uses the hybrid log-gamma (HLG) transfer function, which partly repeats the SDR curve (in its lower part) and partly an HDR curve (in its upper, logarithmic part) (Figure 7). In theory, this allows HLG to be played both on PQ EOTF displays (HDR10, HDR10+, Dolby Vision) and on SDR displays with colorimetric parameters conforming to BT.2020. In terms of realism, HLG, like HDR10, is not able to fully convey the ideas and vision of the author. In addition, because of the shape of the HLG curve, changes in hue may be noticeable on an SDR display if the images contain bright areas of saturated color. As a rule, the distortion is observed in scenes with specular highlights.

Figure 7. HLG curve relative to SDR and PQ HDR
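The hybrid shape of the curve can be sketched in a few lines. The constants and the piecewise form below follow the HLG OETF as published in Rec. ITU-R BT.2100 and ARIB STD-B67; the function name is illustrative, and this is a minimal sketch rather than a full HLG system model (it omits the display-side OOTF).

```python
import math

# HLG constants as published in Rec. ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(scene_light: float) -> float:
    """Map normalized scene light in [0, 1] to a non-linear HLG signal in [0, 1]."""
    if scene_light <= 1 / 12:
        # Lower part: square-root segment, similar to a conventional SDR gamma curve.
        return math.sqrt(3 * scene_light)
    # Upper part: logarithmic segment that carries the highlights.
    return A * math.log(12 * scene_light - B) + C


for light in (0.0, 1 / 12, 0.5, 1.0):
    print(light, hlg_oetf(light))
```

The two segments meet at a signal value of 0.5, so the lower half of the signal range behaves much like SDR and the upper half is reserved for highlights.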

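An HLG video stream can be identified by the transfer_characteristics parameter, which has the value 18 (HLG) or 14 (BT.2020 10-bit, used for SDR-compatible signalling together with the Alternative transfer characteristics SEI message described below).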

For H.264/AVC and H.265/HEVC, the parameter can be specified:

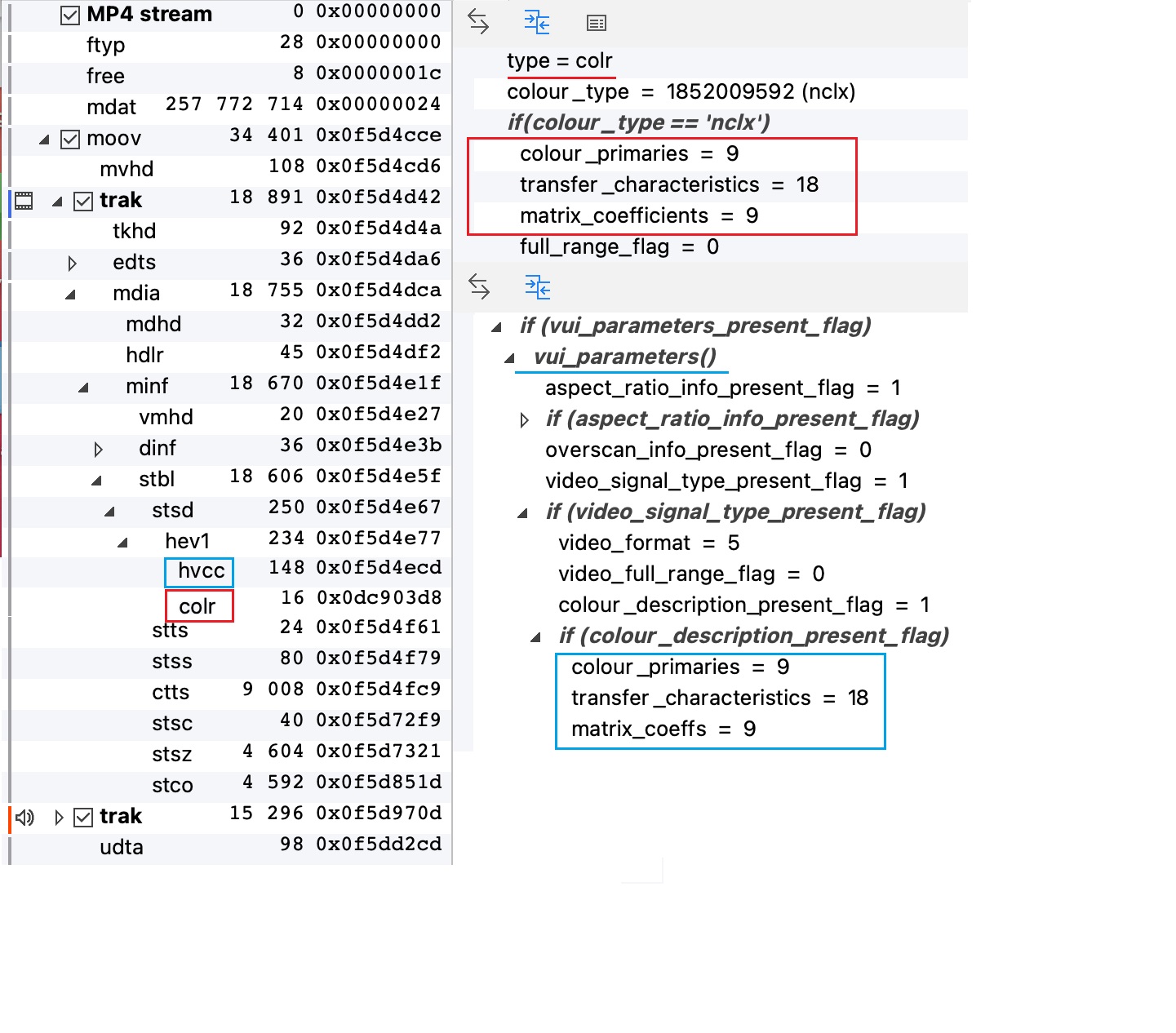

- at the MP4 media container level: in avcC, hvcC or colr boxes (Figure 8);

- at the MKV/WebM media container level in the corresponding TrackEntry video and colour box;

- at the elementary stream level in the SPS → VUI → video_signal_type_present_flag → colour_description_present_flag → transfer_characteristics (Figure 8);

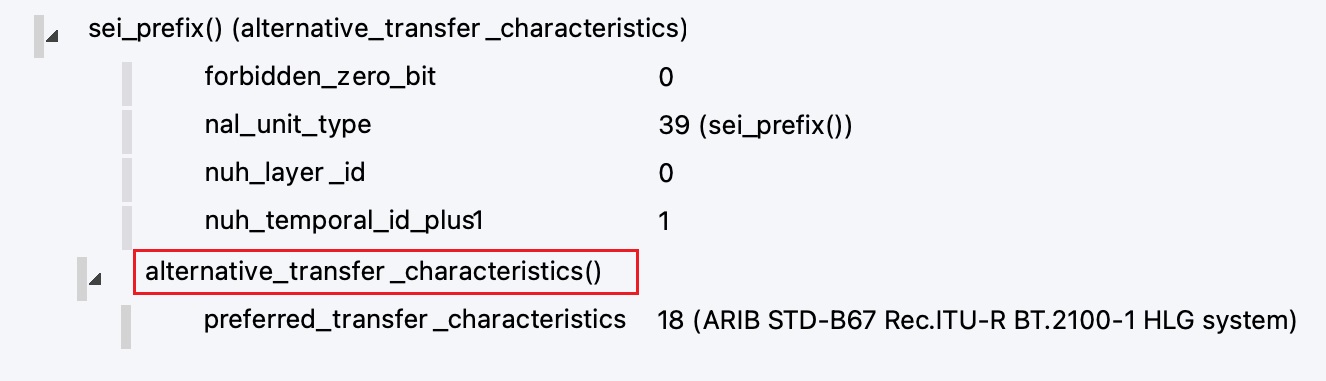

- in the Alternative transfer characteristics SEI message, located in the IDR access unit at the elementary stream level. The message contains the parameter preferred_transfer_characteristics = 18 (Figure 9). If the values in the SEI, VUI and media container differ, the SEI value takes priority.
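The priority rule can be sketched as a small resolver. The article only states that the SEI value wins; ranking the VUI above the container, as done below, is an assumption of this sketch. The labels for the code points follow the H.265 transfer_characteristics table, and the function names are illustrative.

```python
TRANSFER_NAMES = {
    1: "BT.709 (SDR)",
    14: "BT.2020 10-bit (SDR-compatible)",
    16: "PQ (SMPTE ST 2084)",
    18: "HLG (ARIB STD-B67)",
}


def effective_transfer(sei_preferred=None, vui=None, container=None):
    """Pick the transfer characteristics value to trust.

    The SEI value takes priority; VUI before container is an assumption.
    """
    for value in (sei_preferred, vui, container):
        if value is not None:
            return value
    return None


def describe_transfer(sei_preferred=None, vui=None, container=None) -> str:
    value = effective_transfer(sei_preferred, vui, container)
    if value is None:
        return "unspecified"
    return TRANSFER_NAMES.get(value, f"other ({value})")


# A stream signalled as 14 in the VUI and container, with an SEI that prefers 18.
print(describe_transfer(sei_preferred=18, vui=14, container=14))
```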

For VP9, the parameter can be specified at the media container level:

- MP4: in vpcC and colr boxes;

- MKV/WebM: colour box.

For AV1, the parameter can be specified:

- at the elementary stream level in the OBU Sequence Header → color_config → if (color_description_present_flag) → Transfer_characteristics;

- at the MP4 media container level in av1C and colr boxes;

- at the MKV/WebM media container level in the corresponding TrackEntry video and colour box.

Figure 8. Part of SPS VUI parameters from the avcC and colr boxes of the MP4 media container.

Figure 9. SEI message Alternative transfer characteristics.

HDR10+

The standard also describes video content that complies with UHDTV BT.2020.

| Parameter | Value |
|---|---|
| Resolution | Up to 7680 × 4320 (8K) pixels |
| Aspect ratio | 16:9 |
| Pixel ratio | 1:1 |
| Scanning | Progressive |
| Frame rate (fps) | Up to 120 |
| Color depth | 10–16 bit |
| Colorimetric parameters | BT.2020 |
| Luminance | Up to 10,000 cd/m2 in brightly lit scenes, down to 0.0001 cd/m2 in dark scenes |

HDR10+ uses PQ EOTF and is therefore incompatible with SDR displays.

Unlike HDR10, HDR10+ uses dynamic metadata, which allows each scene to be tuned individually during mastering and so conveys the author’s ideas more fully. During content playback, the display is readjusted from scene to scene, following the way the author created the content.

HDR10+ offers backward compatibility with HDR10. If the display does not support HDR10+ dynamic metadata but supports HDR10 static metadata, and such data is present in the stream or media container, the display can play back the video sequence as HDR10.

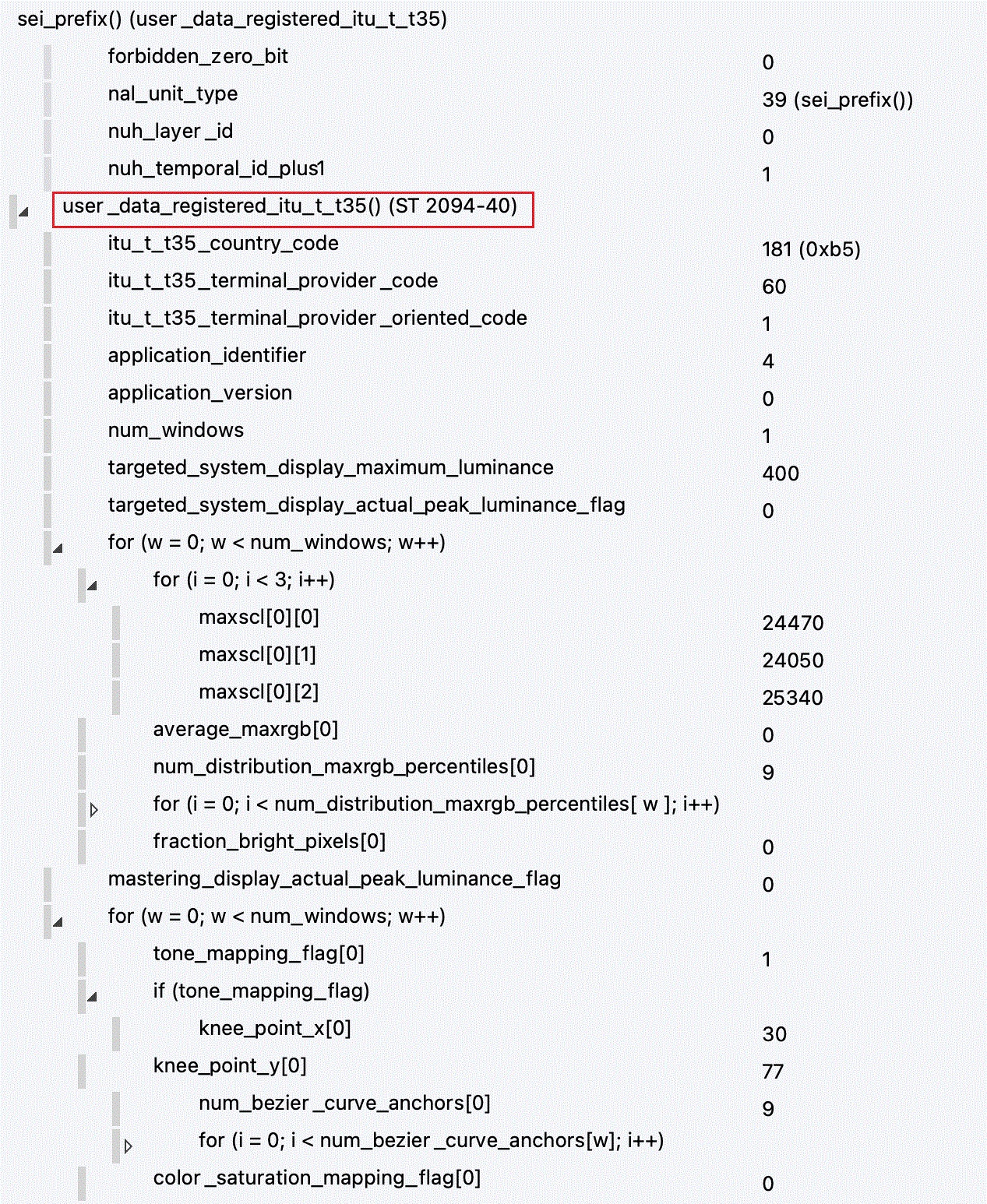

For H.264/AVC and H.265/HEVC, dynamic metadata is located at the elementary stream level in the user_data_registered_itu_t_t35 SEI message (Figure 10). In VP9, the metadata is specified in BlockAddID (ITU-T T.35 metadata) of the WebM container. In AV1, the metadata is specified in the metadata_itut_t35() OBU syntax.

Figure 10. Dynamic metadata HDR10+, SEI message.
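Because several vendors use the same ITU-T T.35 carriage, a parser has to inspect the first bytes of the payload to decide whether it holds HDR10+ data. The sketch below shows the idea. The identifier values (country code 0xB5, provider code 0x003C, provider-oriented code 0x0001, application identifier 4) are assumptions based on common open-source parsers and should be verified against the HDR10+ specification; the function name is illustrative.

```python
import struct

# Assumed HDR10+ identifiers; verify against the HDR10+ specification.
HDR10PLUS_SIGNATURE = (0xB5, 0x003C, 0x0001, 4)


def looks_like_hdr10plus(t35_payload: bytes) -> bool:
    """Check whether a T.35 payload starts with the assumed HDR10+ identifiers.

    The payload is expected to begin with itu_t_t35_country_code.
    """
    if len(t35_payload) < 6:
        return False
    # country code (8 bits), provider code (16), provider-oriented code (16),
    # application identifier (8), all big-endian.
    header = struct.unpack(">BHHB", t35_payload[:6])
    return header == HDR10PLUS_SIGNATURE


sample = bytes([0xB5, 0x00, 0x3C, 0x00, 0x01, 0x04, 0x01])
print(looks_like_hdr10plus(sample))
```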

Dolby Vision

Dolby Vision is the most complex of these standards: a proprietary HDR format developed and licensed by Dolby. It allows two layers to be used simultaneously in one video file: a base layer (BL) and an enhancement layer (EL). In practice, two video layers are rare because of the large size of the video files and the difficulty of preparing and playing back such content.

Dolby Vision has 5 predefined profiles: 4, 5, 7, 8 (8.1 and 8.4) and 9.

| Profile | Supported codecs | Maximum resolution | Maximum frame rate (fps) | Color depth | Presence of two layers | Colorimetric parameters | Compatibility with other SDR/HDR standards | Description | Presence of VUI data |
|---|---|---|---|---|---|---|---|---|---|
| Profile 4¹ | H.265/HEVC Main 10 profile | 3840x2160 | 60 | 10 bits | BL and EL | BT.2020, BT.709 | BL compatible with SDR (2) | | Optionally (2,2,2) |
| Profile 5 | H.265/HEVC Main 10 profile | 7680x4320 | 120 | 10 bits | No, only BL | BT.2020 | No (0) | HLS and MPEG-DASH platforms | Optionally (2,2,2) |
| Profile 7 | H.265/HEVC Main 10 profile 3840x2160 | 7680x4320 | 60 | 10 bits | BL and EL | BT.2020 | (6) | Blu-ray Disc | Mandatory (9,16,9) for EL |
| Profile 8.1 | H.265/HEVC Main 10 profile | 7680x4320 | 120 | 10 bits | No, only BL | BT.2020 | HDR10 (1) | HLS and MPEG-DASH platforms | Mandatory (9,16,9) |
| Profile 8.4² | H.265/HEVC Main 10 profile | | | | No, only BL | BT.2020, BT.2100, BT.709 | HLG, SDR (2, 4) | HLS and MPEG-DASH platforms | Optionally (9,18,9 or 9,14,9) |
| Profile 9 | H.264/AVC High profile | 1920x1080 | 60 | 8 bits | No, only BL | BT.709 | SDR (2) | MPEG-DASH platforms | Optionally |

¹ Profile 4 is not supported for new applications and service providers.

² Profile 8.4 is at the standardization stage. Maximum luminance level is 1,000 cd/m2.

The BL for profiles 5, 8 and 9 and the EL for profiles 4 and 7 use the PQ EOTF, so they are not compatible with SDR displays. These profiles use dynamic metadata similar to HDR10+ metadata. This allows efficient editing of each scene during mastering and accurately conveys the author’s ideas. When content is played back, the display is readjusted from scene to scene based on the dynamic metadata.

In H.264/AVC and H.265/HEVC video formats, Dolby Vision dynamic metadata is located at the elementary stream level:

- In SEI user_data_registered_itu_t_t35 ST2094-10_data ();

- In NAL unit 62 at the elementary stream level.

Dolby has standardized Dolby Vision identification for the MPEG-2 transport stream and MP4 media container. In MPEG-2 TS, information is provided using DOVI Video Stream Descriptor in a PMT table, from the content of which the profile, level, presence of layers and compatibility are determined.

| BL layer compatible with SDR or HDR | BL layer incompatible with SDR and HDR | Secondary Dolby Vision PID |
|---|---|---|
| stream_type in the PMT table should be: | stream_type in the PMT table must be equal to 0x06 | stream_type in the PMT table must be equal to 0x06 |
| bl_present_flag = 1 | bl_present_flag = 1 | bl_present_flag = 0 |
| el_present_flag set according to the profile | el_present_flag = 0 | el_present_flag = 1 |
| dv_bl_signal_compatibility_id corresponds to BL signal compatibility ID | dv_bl_signal_compatibility_id = 0x0 | dv_bl_signal_compatibility_id corresponds to BL signal compatibility ID |

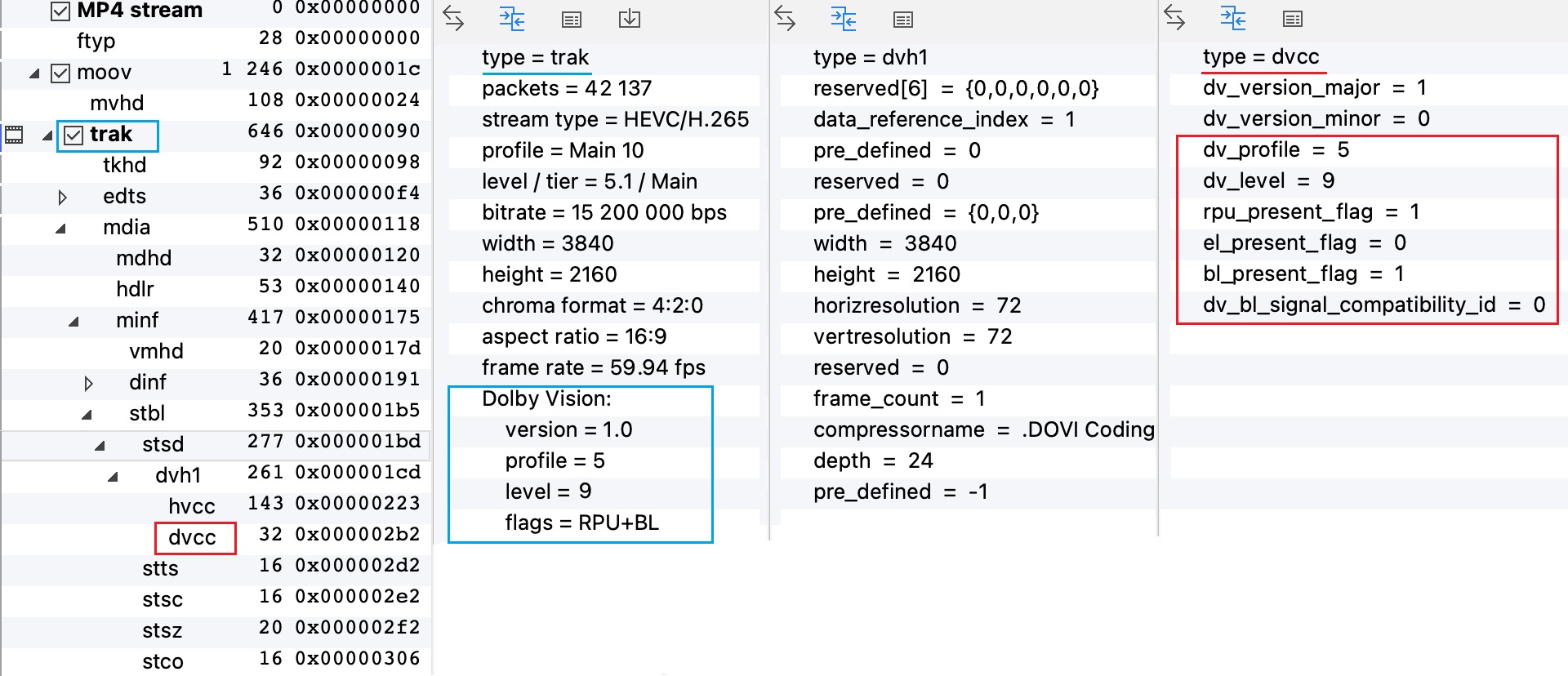

For this purpose, the MP4 container uses configuration boxes: dvcC (for profiles lower than or equal to 7), dvvC (for profiles higher than 7 but lower than 10) and dvwC (for profiles equal to or higher than 10; reserved for future use).

One of the following boxes is also used:

- dvav, dva1, dvhe, dvh1, avc1, avc2, avc3, avc4, hev1, hvc1 for decoder initialization;

- avcC, hvcC to provide information about the codec configuration;

- avcE, hvcE to describe the EL if the second video layer is present (profiles 4 and 7).

Figure 11. Dolby Vision configuration boxes from the MP4 media container.
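As an illustration of how such a configuration box might be read, the sketch below unpacks the first five bytes of its payload and applies the profile rule described above. The bit layout (two version bytes, 7-bit profile, 6-bit level, three presence flags, 4-bit compatibility ID) is an assumption based on the publicly documented Dolby Vision decoder configuration record and should be checked against the Dolby specification; the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class DolbyVisionConfig:
    version_major: int
    version_minor: int
    profile: int
    level: int
    rpu_present: bool
    el_present: bool
    bl_present: bool
    bl_signal_compatibility_id: int


def parse_dovi_config(payload: bytes) -> DolbyVisionConfig:
    """Parse the start of a Dolby Vision configuration box payload (assumed layout)."""
    if len(payload) < 5:
        raise ValueError("payload too short for a Dolby Vision configuration record")
    major, minor, b2, b3, b4 = payload[:5]
    return DolbyVisionConfig(
        version_major=major,
        version_minor=minor,
        profile=b2 >> 1,                     # 7 bits
        level=((b2 & 0x01) << 5) | (b3 >> 3),  # 6 bits across two bytes
        rpu_present=bool((b3 >> 2) & 1),
        el_present=bool((b3 >> 1) & 1),
        bl_present=bool(b3 & 1),
        bl_signal_compatibility_id=b4 >> 4,  # upper 4 bits
    )


def expected_config_box(profile: int) -> str:
    """Return the configuration box name expected for a profile, per the rule above."""
    if profile <= 7:
        return "dvcC"
    if profile < 10:
        return "dvvC"
    return "dvwC"


# Hand-built example: profile 8, level 6, RPU and BL present, compatibility ID 1.
sample = bytes([1, 0, 0x10, 0x35, 0x10]) + bytes(19)
config = parse_dovi_config(sample)
print(config, expected_config_box(config.profile))
```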

General overview of HDR standards

| | HDR10 | HLG | HDR10+ | Dolby Vision |
|---|---|---|---|---|
| Video codec | H.264/AVC, H.265/HEVC, VP9, AV1 | H.264/AVC, H.265/HEVC, VP9, AV1 | H.264/AVC, H.265/HEVC, VP9, AV1 | H.264/AVC, H.265/HEVC |
| Type of HDR metadata | static | absent | dynamic | dynamic³ |
| Backward compatibility with SDR/HDR | absent | SDR | HDR10 | HDR10, HLG, SDR |
| EOTF | PQ | HLG | PQ | PQ, HLG |
| Colorimetric parameters | BT.2020 | BT.2020, BT.2100, BT.709 | BT.2020 | BT.2020, BT.2100, BT.709 |
| Maximum luminance level | 10,000 cd/m2 | 1,000 cd/m2 | 10,000 cd/m2 | 10,000 cd/m2 |
| Possible media container | MP4, MKV/WebM, TS | MP4, MKV/WebM, TS | MP4, MKV/WebM, TS | MP4, TS |

³ Dynamic: profiles 4 (EL), 5, 7, 8.1, 9. Absent in 8.4.

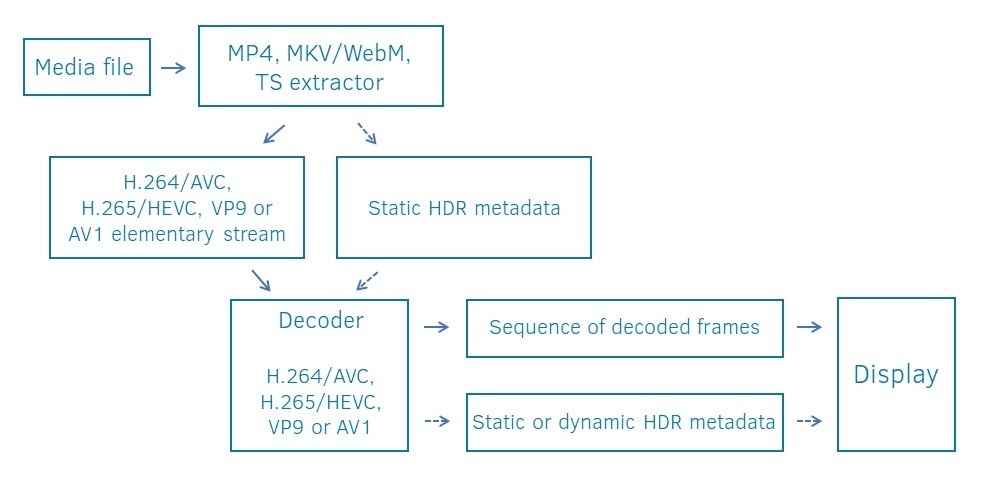

HDR content playback is performed as follows (Figure 12):

- The application extracts the elementary video stream and HDR metadata (if present) from the MP4, MKV/WebM or TS media container, then passes the data to the decoder;

- The decoder decodes the video sequence and extracts static or dynamic HDR metadata from it, or receives the static HDR metadata that was taken from the media container;

- The decoder passes the decoded frames and HDR metadata to the display;

- The display outputs the image.

HLG has no metadata. If there are 2 video layers (BL/EL — profiles 4 or 7 in Dolby Vision), the extractor extracts them, but the application can decide which layer and the corresponding decoder to select, depending on the platform’s capabilities.

Figure 12. The general process of HDR content playback.

HDR video stream validation can be conventionally divided into several stages.

- Checking the video sequence for compliance with the BT.2020/BT.2100 colorimetric parameters and the EOTF (video signal transfer function). For all four video codecs (H.264/AVC, H.265/HEVC, VP9, AV1), this is an identical, standardized set of parameters:

- colour_primaries indicates the chromaticity coordinates of the source primaries in terms of the CIE 1931 definition of x and y;

- transfer_characteristics indicates the reference opto-electronic transfer characteristic function of the source picture;

- matrix_coeffs describes the matrix coefficients used in deriving luma and chroma signals from the green, blue, and red, or Y, Z, and X primaries.

These parameters are located:

For H.264/AVC and H.265/HEVC:

- at the elementary stream level in the VUI headers: Sequence Parameter Set → VUI → video_signal_type_present_flag → colour_description_present_flag (Figure 8);

- at the MP4 media container level in avcC, hvcC or colr boxes (Figure 8);

- at the MKV/WebM media container level in the corresponding TrackEntry video and colour box.

For VP9:

- MP4: in vpcC and colr boxes;

- MKV/WebM: colour box.

For AV1:

- at the elementary stream level in the OBU Sequence Header → color_config → if (color_description_present_flag);

- at the MP4 media container level in av1C and colr boxes;

- at the MKV/WebM media container level in the corresponding TrackEntry video and colour box.

- Checking compliance of resolution, aspect ratio, frame rate, color depth, video codec.

- HDR metadata check.
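The first validation stage can be sketched as a simple check of the three signalling parameters plus the bit depth. The code points used (9 for BT.2020 primaries, 16 for PQ, 18 for HLG, 9 for BT.2020 non-constant luminance) match the (9,16,9) and (9,18,9) triplets in the Dolby Vision table above. Accepting only these values is a simplification of this sketch, and the function name is illustrative.

```python
BT2020_PRIMARIES = 9
PQ_TRANSFER = 16
HLG_TRANSFER = 18
BT2020_NCL_MATRIX = 9


def check_hdr_signalling(colour_primaries, transfer_characteristics,
                         matrix_coeffs, bit_depth):
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []
    if colour_primaries != BT2020_PRIMARIES:
        problems.append(f"colour_primaries is {colour_primaries}, expected 9 (BT.2020)")
    if transfer_characteristics not in (PQ_TRANSFER, HLG_TRANSFER):
        problems.append(
            f"transfer_characteristics is {transfer_characteristics}, "
            "expected 16 (PQ) or 18 (HLG)"
        )
    if matrix_coeffs != BT2020_NCL_MATRIX:
        problems.append(f"matrix_coeffs is {matrix_coeffs}, expected 9 (BT.2020 NCL)")
    if bit_depth < 10:
        problems.append(f"bit depth is {bit_depth}, HDR needs at least 10 bits")
    return problems


print(check_hdr_signalling(9, 16, 9, 10))  # HDR10-style signalling
print(check_hdr_signalling(1, 1, 1, 8))    # typical BT.709 SDR signalling
```

Returning a list of problems rather than a single boolean makes it easier to report every mismatch in one pass, which is usually what a QA workflow needs.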

In this article, we have gathered the most relevant information on each HDR format in one place. The markers described above help you get up to speed with HDR quickly, identify and integrate HDR content, and solve possible problems.

With StreamEye you can check parameters at the elementary stream level, while Stream Analyzer helps to verify parameters both at the elementary stream and the media container levels.

References

- HDR Ecosystem Tracker fall 2020 update, available from https://www.flatpanelshd.com/focus.php?subaction=showfull&id=1606977052

- Recommendation ITU-R BT.2020-2 (10/2015) Parameter values for ultra-high definition television systems for production and international programme exchange

- SMPTE ST 2084:2014 High Dynamic Range Electro-Optical Transfer Function of Mastering Reference Displays

- SMPTE ST 2086:2014 Mastering Display Color Volume Metadata Supporting High Luminance and Wide Color Gamut Images

- CTA-861.3-A, HDR Static Metadata Extensions

- ITU-T H.264, Infrastructure of audiovisual services – Coding of moving video

- ITU-T H.265, Infrastructure of audiovisual services – Coding of moving video

- ISO/IEC 14496-12, Information technology — Coding of audio-visual objects — Part 12 : ISO base media file format

- WebM Container Guidelines, available from https://www.webmproject.org/docs/container/

- Matroska Media Container, available from https://www.matroska.org/technical/elements.html

- AV1 Bitstream & Decoding Process Specification, available from https://aomediacodec.github.io/av1-spec/av1-spec.pdf

- ARIB STD-B67, Essential Parameter Values for the Extended Image Dynamic Range Television (EIDRTV) System for Programme Production

- Recommendation ITU-R BT.2100-2 Image parameter values for high dynamic range television for use in production and international programme exchange

- HDR10+ System Whitepaper, available from https://hdr10plus.org/wp-content/uploads/2019/08/HDR10_WhitePaper.pdf

- SMPTE ST 2094-40:2016 Dynamic Metadata for Color Volume Transform — Application #4

- User_data_registered_itu_t_t35 SEI message for ST 2094-40

- Dolby Vision Profiles and Levels Version 1.3.3 Specification

- Dolby Vision Streams Within the ISO Base Media File Format Version 2.2

- Dolby Vision Streams Within the MPEG-2 Transport Stream Format

- Dolby Vision Streams within the HTTP Live Streaming format

- Dolby Vision Streams within the MPEG-DASH format

- SMPTE ST 2094-10:2016, Dynamic Metadata for Color Volume Transform – Application #1

- International Application Published Under the Patent Cooperation Treaty (PCT), International Application Number PCT/US2016/059862

- ETSI TS 103 572, HDR Signalling and Carriage of Dynamic Metadata for Colour Volume Transform; Application #1 for DVB compliant systems

Author

Alexander Kruglov